Generate, Transfer, Adapt: Learning Functional Dexterous Grasping from a Single Human Demonstration

Jan 7, 2026


Xingyi He

Adhitya Polavaram

Yunhao Cao

Om Deshmukh

Tianrui Wang

Xiaowei Zhou

Kuan Fang

Abstract

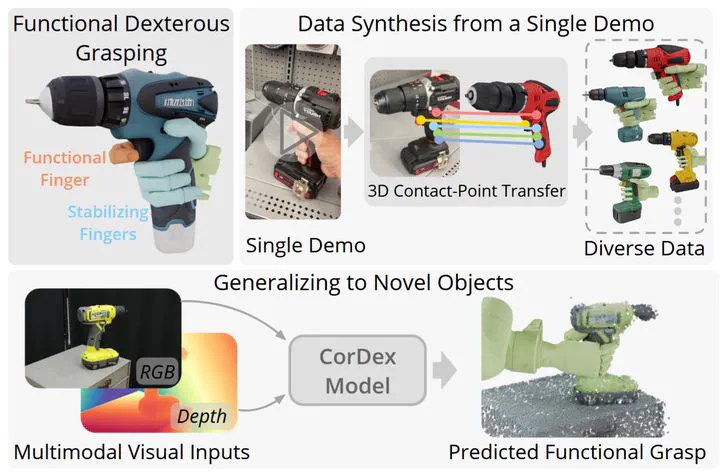

Functional grasping with dexterous robotic hands is a key capability for enabling tool use and complex manipulation, yet progress has been constrained by two persistent bottlenecks: the scarcity of large-scale datasets and the absence of integrated semantic and geometric reasoning in learned models. In this work, we present CorDex, a framework that robustly learns dexterous functional grasps of novel objects from synthetic data generated from just a single human demonstration. At the core of our approach is a correspondence-based data engine that generates diverse, high-quality training data in simulation. Based on the human demonstration, our data engine generates diverse object instances of the same category, transfers the expert grasp to the generated objects through correspondence estimation, and adapts the grasp through optimization. Building on the generated data, we introduce a multimodal prediction network that integrates visual and geometric information. By devising a local–global fusion module and an importance-aware sampling mechanism, we enable robust and computationally efficient prediction of functional dexterous grasps. Through extensive experiments across various object categories, we demonstrate that CorDex generalizes well to unseen object instances and significantly outperforms state-of-the-art baselines.
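The transfer step described above can be illustrated with a toy sketch. It is not the CorDex implementation: the abstract does not specify how correspondences are estimated, so this example assumes per-point descriptors are already available for both objects and matches them by nearest neighbor. The function name `transfer_contacts`, the descriptor arrays, and the toy data are all hypothetical.

```python
import numpy as np

def transfer_contacts(src_feats, tgt_feats, tgt_points, contact_idx):
    """Map grasp contact points from a source object to a target object.

    Assumption: each surface point carries a descriptor, and the target point
    with the closest descriptor is taken as the corresponding point.
    """
    # Descriptor differences between each contact and every target point.
    diff = src_feats[contact_idx][:, None, :] - tgt_feats[None, :, :]
    dist2 = np.sum(diff * diff, axis=-1)   # shape (n_contacts, n_target)
    match = np.argmin(dist2, axis=1)       # best target index per contact
    return tgt_points[match], match

# Toy data: the "new instance" is a shuffled, shifted copy of the source.
rng = np.random.default_rng(0)
src_points = rng.normal(size=(50, 3))
src_feats = rng.normal(size=(50, 8))
perm = rng.permutation(50)
shift = np.array([0.1, 0.0, -0.2])
tgt_points = src_points[perm] + shift
tgt_feats = src_feats[perm]

contact_idx = np.array([3, 17, 42])        # hypothetical demonstrated contacts
transferred, match = transfer_contacts(src_feats, tgt_feats, tgt_points, contact_idx)
```

In this toy setup the transferred contacts land on the shifted copies of the demonstrated contacts. On real objects the correspondences would be approximate, which is why the abstract follows the transfer with an optimization-based adaptation step.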

Publication

In 2026 IEEE International Conference on Robotics & Automation